Charities can derive numerous benefits from the creation and adoption of digital products and services.

By using digital tools to develop, deliver or augment services, charities can experience improved operational efficiency, increased accessibility and usability of services, expanded outreach to marginalised communities, and a more significant overall impact.

Assessing and evaluating a digital product or service often requires a different approach from traditional evaluations of in-person services.

Within this guide, we explore key considerations in relation to monitoring and evaluating digital products and services.

In 2023, Catalyst supported the evaluation and research consultancy inFocus to explore the approaches to monitoring and evaluating a digital product or service, and how different stakeholders can adapt accordingly.

The research included a desk-based review of existing resources, in-depth interviews, and a 1-day workshop with 22 evaluators and representatives from charities, digital agencies, and funders working across the charity sector.

Who is this guide for?

This guide provides advice on the evaluation of digital products and services for four key stakeholders involved in their development.

Structure of this guide

This guide is broken down into three sections.

Agile approaches

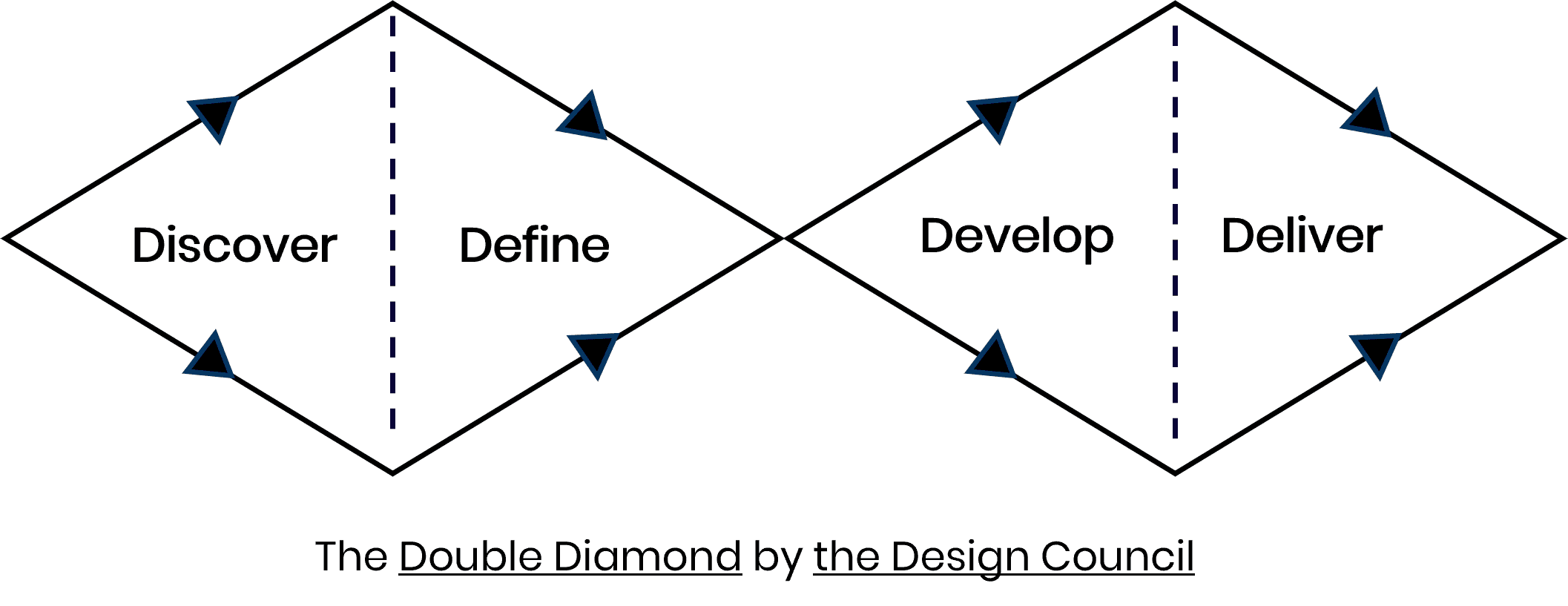

Often, charities will follow an ‘agile’ or iterative approach to developing a digital product or service (see the Design Council’s ‘Double Diamond’ process below). Agile approaches to managing software development projects focus on continuous releases and customer feedback (source: Atlassian). This means that outputs and projected outcomes often change as development pivots.

User research

In agile development, assumptions about outputs and outcomes can be tested through user research (e.g. user interviews or testing) and adjusted to meet the needs of the product or service users. A charity might discover that its original intention for developing a digital product or service doesn't align with user preferences, or that an 'off-the-shelf' or non-digital solution might be preferable.

Conventional evaluations typically involve defining outputs and outcomes at the project's outset. But for digital products and services, this approach can prove overly rigid. For instance, funders may require a comprehensive outcomes framework right from the start of development, emphasising predefined targets that may limit adaptability and hinder the agile learning process.

We'll delve further into the limitations of traditional monitoring and evaluation approaches when applied to digital products and services later in this guide.

Get on the same page with digital: Ensure that all stakeholders are familiar with digital development practices and terminology, such as 'agile' and 'user research', before commencing any evaluation (or the development itself). The scale of this exercise will depend on various factors, such as whether the charity has in-house digital expertise or is digitally advanced.

Define success: Discuss and agree on what a successful project outcome would look like to help manage expectations. For example, you might agree that a development that pivots from its original plans in line with user research is a positive result (rather than a failure), provided a robust process is followed.

Tell stories about digital: Seek out case studies or stories from charities that have undergone a digital development journey. This can aid in setting expectations around the digital development process and highlighting what to anticipate from an evaluation, especially if there have been negative experiences with digital developments in the past.

Stage of development

What to measure and evaluate is often dependent on the stage of development of a digital product or service.

For example, in the discovery stage, we might explore to what extent we have been successful in identifying the needs of a particular audience…

…while it may not be possible to measure the social value of a project or service until it is stable and into the delivery stage (if it gets this far).

Measuring social value

“When you’re shaping a digital product, you build it not just for the sake of building it, which I’ve seen some organisations do, because they just want to keep up with tech, and that’s not a good approach. Instead, it has to be measured alongside their non-digital project goals. Say, for example, you are building a chatbot, which helps signpost people to support services. It’s also important to see what is your overall outcome in that, what are you trying to get people to do? If, for example, you are trying to reduce the pressure on people who work in the service, then that has to be measured on that level, not just the impact that it’s having in terms of getting people to services faster. I feel like the product isn’t the end goal, but the changes it brings with it. Unfortunately, I think that’s not what happens a lot with evaluations.”

Nish Doshi

6 things you could measure

1. Social value: As described above, social value often takes time to emerge, but can sometimes be measured in the earlier stages of the development of a digital product or service. For example, a user group testing a digital application in the development or delivery phase could increase their knowledge about a particular topic.

2. User value: The perceived benefit and satisfaction that users gain from the features, functionality and overall experience of a product or service. User value can also act as a proxy for longer-term social change; if users repeatedly access a product or service and leave positive reviews, they are likely to be benefiting.

3. Learning: What learning has emerged from testing assumptions about the product or service, and what actions have been taken based on the learning?

4. Financial value: This might include the cost savings to a charity and/or overall financial sustainability from creating the digital product or service.

5. In-house expertise: Is there capability and capacity to deliver and continue to run the digital product or service? Think about in-house expertise or partnerships with digital agencies.

6. Quality of processes: You could measure the quality of the software development process. For example, how well have assumptions been tested? How comprehensive was the user research? To what extent have adjustments been made based on lessons learned? How effectively did stakeholders collaborate?

Other measures to think about

Value to the wider sector

Explore the potential for other organisations to reuse the product or service. Have steps been taken towards this, such as implementing a digital commons license? Have organisations operated transparently and shared their learning? Have partnerships been established that could benefit the wider sector?

Where the digital product or service fits

It’s also important to consider where the digital product or service fits within the service offering of a charity. Is the digital product or service focused on increasing the reach of an in-person service, or providing an online alternative to an in-person service to improve accessibility? If so, measuring reach and access might be a priority.

“Just thinking about this in the context of digital inclusion. I think there’s something about digital products and services which might cater towards a different audience that might not be comfortable using phone calls, for example. I think there is value in that. But there’s also the aspect that not everyone has access to digital. So it shouldn’t be the only product or service, but part of a broader project using multiple ways for people to engage. So, I think it’s really important in the evaluation that it’s not just a product that’s measured on its own impact, but also in its position within a broader project evaluation.”

Annie Caffyn

Unintended negative impacts

For example, has the digital product or service widened the digital divide by inadvertently excluding individuals or communities without access to technology or the necessary digital skills?

Consequence scanning can help to foresee potential negative impacts.

Unintended positive impacts

For example, staff at charities may acquire new skills in user research or agile project management that they can apply to other projects, whether digital or non-digital. They might also gain insight into developing or updating a digital strategy.

Consider these key points when evaluating a digital product or service that is still in development or that follows an agile development process.

1. Avoid producing (or requesting, if a funder) a detailed set of social outcome measures at the start of a project, as the project could pivot away from them.

2. A theory of change or top-line set of outcomes can be helpful as a framework to understand why something exists but should not necessarily be used to develop a measurement framework at the outset.

3. Setting more regular points of contact across an evaluation (measuring little but often) can provide more rapid and useful learning. Data collection tools could also be adapted regularly to take account of ongoing learning.

Developmental evaluation is a useful reference point, with its focus on real-time ongoing feedback and evaluators taking the role of a ‘critical friend’ to charities.

This can be particularly helpful for charities going through a digital development process for the first time, as they may benefit from empathy and support.

Developmental Evaluation (DE) is an evaluation approach that can assist social innovators develop social change initiatives in complex or uncertain environments.

DE originators liken their approach to the role of research & development in the private sector product development process because it facilitates real-time, or close to real-time, feedback to program staff thus facilitating a continuous development loop.

Developmental evaluation - betterevaluation.org

While outcomes measurement through more traditional methods (e.g. online surveys) may be possible in the early stages of development, it often won’t be possible again until after delivery, when the digital product or service is more stable. In the earlier stages, the following combination is more common:

Qualitative evaluation methods

Interviews, online focus groups and learning workshops can help charities reflect on the process of developing a digital product or service and pick up on any unintended outcomes.

Usage statistics (e.g. via Google Analytics)

Look at site visits and user engagement metrics that measure performance and user behaviour. Evaluators should ensure they are comfortable with accessing and interpreting this type of data.
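As a rough illustration of what interpreting this kind of data can involve, the sketch below summarises a small visit log into total visits, unique users and the share of users who came back on another day (repeat use being one possible proxy for user value). The data, the field layout and the function name `summarise_usage` are all hypothetical; this does not reflect how Google Analytics or any other tool exports or defines its metrics.

```python
from collections import defaultdict
from datetime import date

# Hypothetical export: one row per visit, as (anonymised user id, visit date).
# Real analytics tools will use different fields and definitions.
visits = [
    ("u1", date(2024, 1, 3)),
    ("u2", date(2024, 1, 3)),
    ("u1", date(2024, 1, 10)),
    ("u3", date(2024, 1, 11)),
    ("u1", date(2024, 1, 17)),
    ("u2", date(2024, 1, 18)),
]


def summarise_usage(visits):
    """Summarise visits, unique users and users who returned on another day."""
    days_by_user = defaultdict(set)
    for user_id, visit_date in visits:
        days_by_user[user_id].add(visit_date)

    unique_users = len(days_by_user)
    # A "returning" user is assumed here to be one seen on more than one day.
    returning_users = sum(1 for days in days_by_user.values() if len(days) > 1)

    return {
        "total_visits": len(visits),
        "unique_users": unique_users,
        "returning_users": returning_users,
        "returning_share": returning_users / unique_users if unique_users else 0.0,
    }


summary = summarise_usage(visits)
print(summary)
```

Running a summary like this at regular intervals, rather than once at the end, fits the "little but often" approach described earlier in this guide.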

Overall, try to build data collection into the development and design process (e.g. registration questions, usage data or feedback gathering) rather than seeing evaluation as a separate process.

Thank you!

Thank you for taking the time to read through our guide. We hope it was helpful.

We are now working on a new Catalyst project to develop a toolkit that will go into more detail with indicators (specific pieces of data) and tools you could use to measure digital products and services.

If you would like to get early access to the toolkit later in 2024, you can register here.

Feedback

This is the first version of the guide. Help us improve it! Please share your thoughts in the feedback form below.